Generative LLMs

Crash-course LLMs for Social Science

Nicolai Berk

2025-09-12

Recap: Encoder Models (BERT)

- Input: “The [MASK] is barking loudly”

- BERT: Processes entire sentence simultaneously

- Output: probability distribution over the vocabulary for the masked position (“dog” (87%), “puppy” (8%), “animal” (3%))

- Bidirectional: Reads text in both directions

Masked Language Modeling: Trained to fill in blanks

Recap: Downstream Encoder Tasks

- Classify documents

- Extract information

- Measure similarity

How a Decoder Works

Source: Tunstall, Von Werra, and Wolf (2022)

- Input: “Cause and…”

- Predicts next token: “effect”

- Repeats until stopping condition

- Autoregressive generation

“Causal” language modeling
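The generation loop can be sketched in a few lines of Python. This is a minimal sketch, not how any particular library implements it. `next_token_probs` is a hypothetical stand-in for a trained decoder: it is a hard-coded table that only looks at the last token, whereas a real model conditions on the whole prefix. Greedy decoding (always pick the most probable token) is also just one possible strategy.

```python
# Hypothetical toy "model": maps the last token to next-token probabilities.
# A real decoder conditions on the entire prefix, not just the last token.
TOY_BIGRAMS = {
    "Cause": {"and": 0.9, "of": 0.1},
    "and": {"effect": 0.8, "reason": 0.2},
    "effect": {"<eos>": 0.7, "and": 0.3},
}


def next_token_probs(prefix: list[str]) -> dict[str, float]:
    """Return a probability distribution over the next token (toy stand-in)."""
    return TOY_BIGRAMS.get(prefix[-1], {"<eos>": 1.0})


def generate_greedy(prompt: list[str], max_new_tokens: int = 10) -> list[str]:
    """Autoregressive generation: predict, append, repeat until a stop condition."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):        # stop condition 1: length limit
        probs = next_token_probs(tokens)
        token = max(probs, key=probs.get)  # greedy: most probable next token
        if token == "<eos>":               # stop condition 2: end-of-sequence token
            break
        tokens.append(token)               # the new token becomes part of the input
    return tokens


print(generate_greedy(["Cause", "and"]))  # ['Cause', 'and', 'effect']
```

Sampling from `probs` instead of taking the maximum gives more varied output; the loop itself stays the same.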

Major differences from encoders

- Autoregressive: Generates one token at a time

- “Causal” attention: Only attends to previous tokens

- Massive scale: Often billions of parameters
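“Causal” attention is implemented with a mask: before the softmax, every score for a later position is set to minus infinity, so its attention weight becomes zero. A minimal NumPy sketch for a single attention head, assuming the raw scores are already computed (here they are random numbers standing in for query-key dot products; scaling and value vectors are left out).

```python
import numpy as np


def causal_attention_weights(scores: np.ndarray) -> np.ndarray:
    """Turn raw attention scores (seq_len x seq_len) into causal attention weights.

    Position i may only attend to positions j <= i, so scores above the
    diagonal are replaced by -inf before the row-wise softmax.
    """
    seq_len = scores.shape[0]
    allowed = np.tri(seq_len, dtype=bool)        # lower triangle incl. diagonal
    masked = np.where(allowed, scores, -np.inf)  # hide "future" tokens
    # Numerically stable softmax over each row; exp(-inf) = 0
    masked = masked - masked.max(axis=1, keepdims=True)
    weights = np.exp(masked)
    return weights / weights.sum(axis=1, keepdims=True)


# Random scores for a 4-token sequence (stand-ins for query-key dot products)
rng = np.random.default_rng(0)
weights = causal_attention_weights(rng.normal(size=(4, 4)))
print(np.round(weights, 2))  # upper triangle is all zeros
```

The first token can only attend to itself, so its row is always `[1, 0, 0, 0]`. An encoder like BERT skips the mask, which is what makes it bidirectional.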

What does this look like?

Visualization

Take 5-10 minutes to explore the visualization and discuss with your neighbor how the decoder architecture works.

Training GPT

- Pretraining: predict the next token (causal LM objective)

- Scale = data + parameters + compute

- Fine-tuning:

  - Instruction tuning (datasets of Q&A)

  - RLHF (reinforcement learning from human feedback): aligning with human preferences
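The causal LM objective can be written as the average negative log-likelihood of each token given all tokens before it. This is the standard formulation; the notation ($x_t$ for the token at position $t$, $p_\theta$ for the model with parameters $\theta$) is ours:

```latex
\mathcal{L}(\theta) = -\frac{1}{T} \sum_{t=1}^{T} \log p_\theta\left(x_t \mid x_{<t}\right)
```

For example, if the model assigns probability 0.5 to “effect” after “Cause and”, that token contributes $-\log 0.5 \approx 0.69$ to the sum; a perfect prediction (probability 1) contributes 0.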

Decoder Tasks

- Content generation

Surprisingly generalizable task!

- Zero/few-shot classification

- Code generation

- Translation

- App development

…many more things it was not trained to do!

Inference with LLMs

Prompting

Remember: do prompt engineering on the validation set, not the test set!

Writing a good prompt

- Persona

- Task

- Context

- Format

You are a program manager in [industry]. Draft an executive summary email to [persona] based on [details about relevant program docs]. Limit to bullet points.
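Keeping the four components as separate pieces and assembling them in code makes it easy to vary one while holding the others fixed during prompt engineering. A minimal sketch; `build_prompt` and all argument values below are hypothetical illustrations, not a fixed recipe.

```python
def build_prompt(persona: str, task: str, context: str, output_format: str) -> str:
    """Combine persona, task, context, and format into one prompt string."""
    return "\n\n".join([
        f"You are {persona}.",   # persona: who the model should act as
        task,                    # task: what it should do
        f"Context:\n{context}",  # context: the material to work with
        output_format,           # format: what the answer should look like
    ])


prompt = build_prompt(
    persona="a political scientist specializing in party competition",
    task="Summarize the main policy positions in the speech below.",
    context="We need to raise tariffs to protect domestic jobs.",
    output_format="Answer with at most three bullet points.",
)
print(prompt)
```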

Controlling model output

pydantic

Lets you impose structure on model outputs.
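A minimal sketch, assuming pydantic v2: a schema restricts the label to the allowed values and the confidence to [0, 1], and any model output that does not fit is rejected instead of silently entering the data. The `SentimentLabel` schema and the two raw outputs are hypothetical examples.

```python
from typing import Literal

from pydantic import BaseModel, Field, ValidationError


class SentimentLabel(BaseModel):
    """Schema the model's answer must satisfy."""
    label: Literal["positive", "negative", "neutral"]
    confidence: float = Field(ge=0.0, le=1.0)


# Hypothetical raw outputs returned by an LLM as JSON strings
good_output = '{"label": "positive", "confidence": 0.92}'
bad_output = '{"label": "very positive", "confidence": 1.3}'

parsed = SentimentLabel.model_validate_json(good_output)
print(parsed.label, parsed.confidence)  # positive 0.92

try:
    SentimentLabel.model_validate_json(bad_output)
except ValidationError as err:
    # Both fields violate the schema, so the output is rejected
    print("Rejected:", err.error_count(), "errors")
```

`SentimentLabel.model_json_schema()` returns the matching JSON Schema, which can be put into the prompt or passed to APIs that accept a schema for structured output.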

Labelling with LLMs

- Zero-shot: only the instruction is provided, no examples

- Few-shot: a few examples provided

- Dynamic few-shot: examples selected based on similarity to the input
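Zero-shot and few-shot prompts differ only in what goes into the prompt. A minimal sketch using the instruction and the three labelled examples from the few-shot labelling slide; `make_prompt` and the new input text are hypothetical. Passing no examples gives a zero-shot prompt; passing a fixed list gives a few-shot prompt; choosing the list per input gives dynamic few-shot.

```python
from collections.abc import Sequence

INSTRUCTION = (
    "Your task is to analyze the sentiment in the TEXT below from an investor "
    "perspective and label it with only one of the three labels: "
    "positive, negative, or neutral."
)

# Labelled examples from the few-shot labelling slide
EXAMPLES = [
    ("Operating profit increased, from EUR 7m to 9m compared to the previous reporting period.", "positive"),
    ("The company generated net sales of 11.3 million euro this year.", "neutral"),
    ("Profit before taxes decreased to EUR 14m, compared to EUR 19m in the previous period.", "negative"),
]


def make_prompt(text: str, examples: Sequence[tuple[str, str]] = ()) -> str:
    """Zero-shot prompt if `examples` is empty, few-shot prompt otherwise."""
    parts = [INSTRUCTION]
    if examples:
        parts.append("Examples:")
        parts.extend(f"Text: {ex_text}\nLabel: {ex_label}" for ex_text, ex_label in examples)
    parts.append(f"Text: {text}\nLabel:")  # left open for the model to complete
    return "\n\n".join(parts)


new_text = "Net sales fell by 4% compared to last year."  # hypothetical input
zero_shot = make_prompt(new_text)
few_shot = make_prompt(new_text, EXAMPLES)
print(few_shot)
```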

Few-shot labelling example

Your task is to analyze the sentiment in the TEXT below from an investor perspective and label it with only one of the three labels:

positive, negative, or neutral.

Examples:

Text: Operating profit increased, from EUR 7m to 9m compared to the previous reporting period.

Label: positive

Text: The company generated net sales of 11.3 million euro this year.

Label: neutral

Text: Profit before taxes decreased to EUR 14m, compared to EUR 19m in the previous period.

Label: negative

Dynamic few-shot labelling

Idea: most similar examples should be most informative

- Use the cosine similarity of embeddings to assess similarity

- Add k most similar examples to the prompt
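A minimal NumPy sketch of the selection step. The three-dimensional embeddings are made up for illustration; in practice they would come from an embedding model, and the `cosine_similarity` named on the slide may refer to scikit-learn's function of that name. It is written out here to keep the sketch self-contained, and `select_examples` is a hypothetical helper.

```python
import numpy as np


def cosine_similarity(query: np.ndarray, matrix: np.ndarray) -> np.ndarray:
    """Cosine similarity between one query vector and every row of `matrix`."""
    return (matrix @ query) / (np.linalg.norm(matrix, axis=1) * np.linalg.norm(query))


def select_examples(query_emb, example_embs, examples, k=2):
    """Return the k labelled examples whose embeddings are closest to the query."""
    sims = cosine_similarity(query_emb, example_embs)
    top = np.argsort(-sims)[:k]  # indices of the k highest similarities
    return [examples[i] for i in top]


examples = [
    ("Operating profit increased, from EUR 7m to 9m compared to the previous reporting period.", "positive"),
    ("The company generated net sales of 11.3 million euro this year.", "neutral"),
    ("Profit before taxes decreased to EUR 14m, compared to EUR 19m in the previous period.", "negative"),
]
# Hypothetical embeddings (one row per example); real ones come from an embedding model
example_embs = np.array([
    [0.9, 0.1, 0.0],
    [0.1, 0.9, 0.1],
    [0.8, 0.0, 0.6],
])
# Hypothetical embedding of the input "Operating profit fell to EUR 5m."
query_emb = np.array([0.85, 0.05, 0.5])

chosen = select_examples(query_emb, example_embs, examples, k=2)
for text, label in chosen:
    print(label, "|", text)
```

The selected pairs are then inserted into the prompt exactly like fixed few-shot examples; only the choice of examples changes per input.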

Retrieval Augmented Generation (RAG)

- Retrieve the most relevant documents given a query (e.g. context for a question)

- [Optional: rerank the retrieved documents using a cross-encoder]

- Use these documents as context in the prompt to generate an answer

Use-cases: archival research, chatbots, …
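The three RAG steps can be sketched as one function that builds the prompt; the final LLM call is left out, since it can go to any of the hosting options. This is a minimal sketch under loud assumptions: `overlap_score` is a crude word-overlap retriever swapped in for embedding search, the `rerank` hook is a hypothetical placeholder for a cross-encoder, and the archive snippets are made up.

```python
import re
from typing import Callable, Optional


def overlap_score(query: str, doc: str) -> float:
    """Toy relevance score: share of query words that also occur in the document.
    A stand-in for embedding similarity, not a realistic retriever."""
    q = set(re.findall(r"\w+", query.lower()))
    d = set(re.findall(r"\w+", doc.lower()))
    return len(q & d) / len(q) if q else 0.0


def build_rag_prompt(
    question: str,
    documents: list[str],
    k: int = 2,
    rerank: Optional[Callable[[str, list[str]], list[str]]] = None,
) -> str:
    # 1. Retrieve: keep the k highest-scoring documents
    retrieved = sorted(documents, key=lambda doc: overlap_score(question, doc), reverse=True)[:k]
    # 2. Optional: rerank the retrieved documents (hypothetical hook, e.g. a cross-encoder)
    if rerank is not None:
        retrieved = rerank(question, retrieved)
    # 3. Generate: the retrieved documents become the context in the prompt
    context = "\n".join(f"- {doc}" for doc in retrieved)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


# Hypothetical archival snippets
archive = [
    "The parliament debated the tariff bill in March 1998.",
    "The minister resigned after the 1998 budget vote.",
    "Local elections were held in spring 2002.",
]
question = "When was the tariff bill debated?"
print(build_rag_prompt(question, archive, k=1))
```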

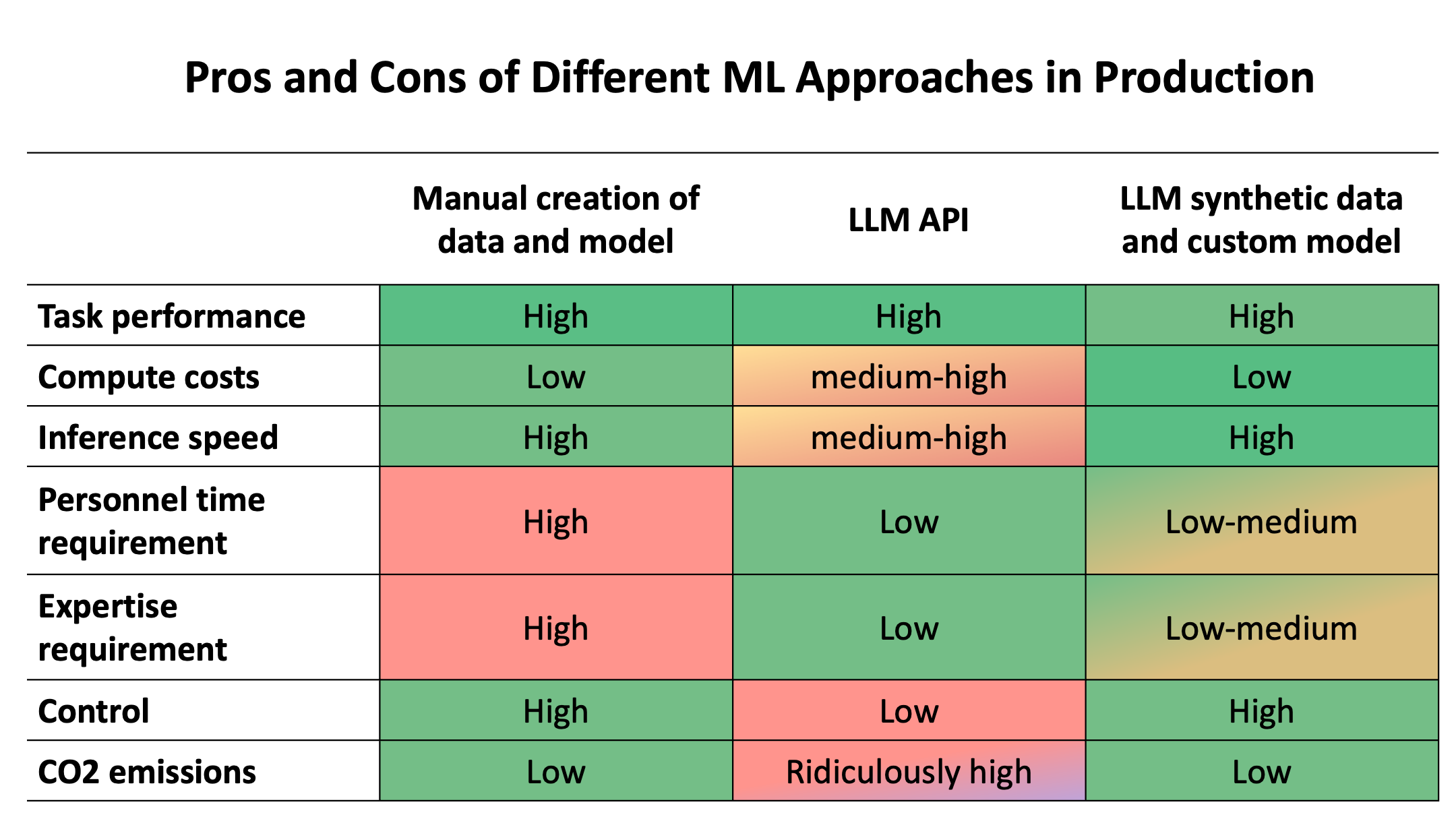

Synthetic Annotation

Source: Moritz Laurer on HF Blog

- Use LLM to annotate training data

- Generate synthetic labels

- Train smaller encoder model on synthetic data

- Evaluate on gold standard

- Apply cost-efficiently at scale
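The “Evaluate on gold standard” step can be as simple as comparing labels against a hand-coded sample. A minimal sketch with made-up labels (hypothetical data); the same check works for the LLM's synthetic labels and for the smaller encoder trained on them. In practice, scikit-learn's `classification_report` also reports precision and F1.

```python
from collections import Counter


def agreement_report(gold: list[str], predicted: list[str]) -> dict:
    """Accuracy and per-class recall of predicted labels against gold-standard labels."""
    if len(gold) != len(predicted):
        raise ValueError("gold and predicted must have the same length")
    accuracy = sum(g == p for g, p in zip(gold, predicted)) / len(gold)
    totals = Counter(gold)                                     # gold examples per class
    hits = Counter(g for g, p in zip(gold, predicted) if g == p)  # correct per class
    recall = {label: hits[label] / n for label, n in totals.items()}
    return {"accuracy": accuracy, "recall": recall}


# Hypothetical hand-coded gold labels and LLM-generated synthetic labels
gold = ["positive", "negative", "neutral", "negative", "positive"]
synthetic = ["positive", "negative", "negative", "negative", "neutral"]

print(agreement_report(gold, synthetic))
```

Per-class recall matters here: a labeller can look fine on overall accuracy while missing a rare class entirely.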

Zero-shot encoder models

Laurer et al. (2024)

- Task: natural language inference (NLI), which can express any classification task

- Allows prompting

- Controlled output

- Class probabilities

- Efficient

Try this before using generative models

How does it work?

Laurer et al. (2024), Table 1

- Class-hypotheses: “It is about economy”, “it is about democracy”, …

- E.g. “We need to raise tariffs” as context

- Test each of the class-hypotheses against this context

- Probabilities for entailment and contradiction are converted to label probabilities
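A minimal NumPy sketch of the conversion step, assuming the NLI model returns two logits per hypothesis (contradiction, entailment); many NLI models also have a neutral class, which this sketch ignores. The logits are made up for illustration. As far as we know, the Hugging Face zero-shot-classification pipeline follows the same two rules: a softmax over the entailment logits across hypotheses for single-label classification, and a per-hypothesis softmax over contradiction vs. entailment for multi-label classification.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax along `axis`."""
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


labels = ["economy", "democracy", "environment"]
# Hypothetical NLI logits for context "We need to raise tariffs"
# and hypotheses "It is about {label}"; columns: [contradiction, entailment]
logits = np.array([
    [-2.0, 3.0],   # "It is about economy"
    [1.5, -1.0],   # "It is about democracy"
    [2.0, -2.0],   # "It is about environment"
])

# Single-label: softmax over the entailment logits across all hypotheses
single = softmax(logits[:, 1])
# Multi-label: per hypothesis, softmax over [contradiction, entailment]; keep entailment
multi = softmax(logits, axis=1)[:, 1]

for label, p_single, p_multi in zip(labels, single, multi):
    print(f"{label:12s} single={p_single:.3f} multi={p_multi:.3f}")
```

Single-label probabilities sum to 1 across classes; multi-label probabilities are independent, so a text can score high on several classes at once.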

Tutorial I

LLM inference and prompting

Hosting Models & Calling APIs

HF Inference Endpoints

Local Hosting

Ollama

Azure

OpenAI

Tutorial II

API calls, Structured Output

Resources

Laurer, Moritz, Wouter Van Atteveldt, Andreu Casas, and Kasper Welbers. 2024. “Less Annotating, More Classifying: Addressing the Data Scarcity Issue of Supervised Machine Learning with Deep Transfer Learning and BERT-NLI.” Political Analysis 32 (1): 84–100.

Tunstall, Lewis, Leandro Von Werra, and Thomas Wolf. 2022. Natural Language Processing with Transformers. O’Reilly Media.

Social Science applications